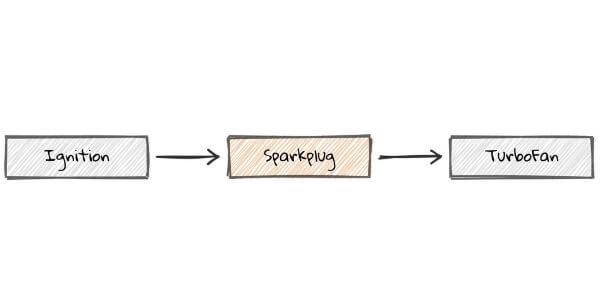

How does Google deliver a high-performance browser? Since Chrome launched in 2008, speed has been one of its four basic principles. Chrome 91 speeds up the browser's JavaScript execution with the help of the new Sparkplug compiler. JavaScript is an important part of modern website design, but executing it can be a bottleneck for a browser. In recent years, the V8 engine has run JavaScript through a two-tier pipeline: the Ignition interpreter and the TurboFan optimizing compiler.

TurboFan is an optimizing compiler: it generates machine code and optimizes it using information collected while the JavaScript runs, which means a slower start but faster code. Ignition is a bytecode interpreter that gets started quickly. According to Google, Chrome's V8 engine executes around 78 years' worth of JavaScript every day, and a 23% speedup cuts about 17 years from that daily total.

Sparkplug is designed to compile so quickly that V8 can compile almost whenever it wants, which lets it tier up to Sparkplug code much more aggressively than it can to TurboFan code. What tricks make Sparkplug so fast? First, the functions it compiles have already been compiled to bytecode, and the bytecode compiler has already done most of the hard work, such as variable resolution and working out whether parentheses actually introduce an arrow function. Sparkplug therefore compiles from bytecode rather than from JavaScript source.

Second, Sparkplug generates no intermediate representation (IR), unlike most compilers. Instead, it compiles directly to machine code in a single linear pass over the bytecode, emitting code that matches the execution of each bytecode.
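To make the single-pass idea concrete, here is a toy sketch in Python. It is not V8's code: the opcode names are loosely modeled on Ignition's, while the operand encoding, the `emit_*` templates, and the pseudo-assembly strings are all assumptions made for illustration. The point is only the shape: one loop over the bytecode, one fixed code template per opcode, and no IR in between.

```python
from typing import Callable, Dict, List, Tuple

# A bytecode instruction as (opcode, operand). Hypothetical encoding.
Instruction = Tuple[str, int]


def slot(register: int) -> str:
    # Virtual register r<n> lives in a fixed frame slot (8 bytes each, assumed).
    return f"[fp - {8 * (register + 1)}]"


def emit_lda_smi(value: int) -> List[str]:
    # Load a small integer into the accumulator.
    return [f"mov acc, {value}"]


def emit_star(register: int) -> List[str]:
    # Store the accumulator into a virtual register.
    return [f"mov {slot(register)}, acc"]


def emit_add(register: int) -> List[str]:
    # Add a virtual register to the accumulator.
    return [f"add acc, {slot(register)}"]


def emit_return(_unused: int) -> List[str]:
    return ["ret"]


# One fixed code-generation function per opcode.
EMITTERS: Dict[str, Callable[[int], List[str]]] = {
    "LdaSmi": emit_lda_smi,
    "Star": emit_star,
    "Add": emit_add,
    "Return": emit_return,
}


def compile_baseline(bytecode: List[Instruction]) -> List[str]:
    """Single linear pass: dispatch each bytecode to its emitter, no IR."""
    machine_code: List[str] = []
    for opcode, operand in bytecode:
        machine_code.extend(EMITTERS[opcode](operand))
    return machine_code


program = [("LdaSmi", 2), ("Star", 0), ("LdaSmi", 3), ("Add", 0), ("Return", 0)]
for line in compile_baseline(program):
    print(line)
```

Because each emitter looks at one bytecode in isolation, the only optimizations available are local ones, which is the trade-off the next paragraph describes.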

The lack of an IR means the compiler has limited optimization opportunities beyond very local peephole optimizations. It also means the whole implementation has to be ported separately to every supported architecture, since there is no architecture-independent intermediate stage. Neither turns out to be a problem: a fast compiler is a simple compiler, so the code is easy to port, and Sparkplug does not need heavy optimization because a good optimizing compiler sits later in the pipeline.

Adding a new compiler to an existing, mature JavaScript VM is a daunting task. There is a lot to support beyond basic execution: V8 has a debugger, a stack-walking CPU profiler, stack traces for exceptions, integration into tier-up, and more.

Sparkplug simplifies most of these problems away with one trick: it maintains “frames that are compatible with the interpreter.”

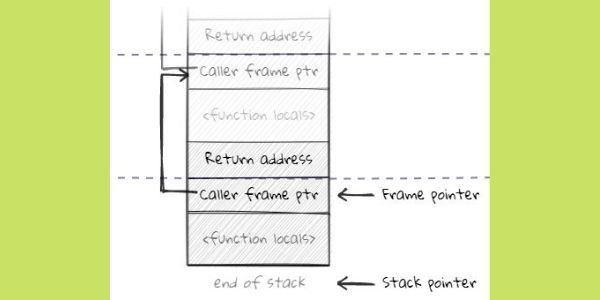

To see why, let's step back. A stack frame is where executing code stores a function's state: whenever a new function is called, a new stack frame is created on the stack for that function's local variables.

A stack frame is delimited by a frame pointer, which marks its start, and a stack pointer, which marks its end.

When a function is called, its return address is pushed onto the stack; the function pops it off when it returns, to know where to return to. When that function creates a new frame, it first saves the old frame pointer on the stack and then sets the new frame pointer to the start of its own stack frame. The stack therefore holds a chain of frame pointers, each marking the start of a frame and pointing to the previous one.
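The chain of saved frame pointers can be simulated in a few lines of Python. This is a simplified model, not V8's layout: the stack is a plain list that grows upward (real machine stacks usually grow downward), `-1` is an assumed sentinel for "no previous frame", and `call` and `walk_frames` are hypothetical helper names.

```python
from typing import List

NO_FRAME = -1  # assumed sentinel: there is no caller frame


def call(stack: List[int], fp: int, return_address: int, num_locals: int) -> int:
    """Simulate a call and return the callee's frame pointer."""
    stack.append(return_address)     # return address is pushed at the call
    stack.append(fp)                 # callee saves the old frame pointer
    new_fp = len(stack) - 1          # new frame pointer marks the saved-fp slot
    stack.extend([0] * num_locals)   # room for the callee's local variables
    return new_fp


def walk_frames(stack: List[int], fp: int) -> List[int]:
    """Follow the saved frame pointers and collect each return address."""
    return_addresses: List[int] = []
    while fp != NO_FRAME:
        return_addresses.append(stack[fp - 1])  # return address sits just before
        fp = stack[fp]                          # hop to the previous frame
    return return_addresses


stack: List[int] = []
fp = call(stack, NO_FRAME, return_address=100, num_locals=2)
fp = call(stack, fp, return_address=200, num_locals=1)
fp = call(stack, fp, return_address=300, num_locals=0)
print(walk_frames(stack, fp))  # [300, 200, 100]
```

A profiler or debugger that walks the stack does essentially what `walk_frames` does, which is why a shared frame layout matters so much for tooling.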

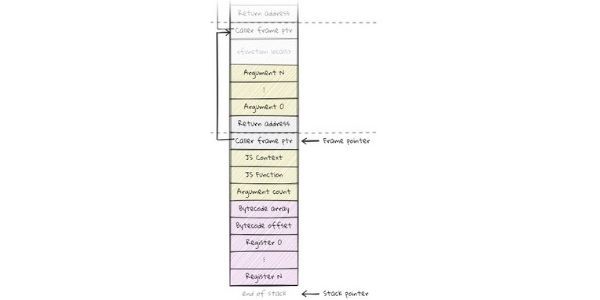

This is the general stack layout for all types of function. On top of it come conventions for how arguments are passed and how a function stores values in its frame. V8's convention for JavaScript frames is that the arguments are pushed onto the stack in reverse order before the function is called, and that the first slots of the frame hold the current function being called and the context it is called with.

This JavaScript calling convention is shared between interpreted and optimized frames. It is what allows V8 to walk the stack with minimal overhead, for example when profiling code in the performance panel of the debugger.

The convention becomes more explicit in the case of the Ignition interpreter. Ignition is a register-based interpreter, meaning that virtual registers (not to be confused with machine registers) store the interpreter's current state. These include JavaScript function locals and temporary values. The registers are stored in the interpreter's stack frame, along with a pointer to the bytecode array being executed and the offset of the current bytecode within that array.
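As a rough illustration of what such a frame holds, the sketch below models a tiny register-based interpreter in Python. The `InterpreterFrame` class, the four-register default, and the opcode encoding are assumptions for this example; the accumulator is kept as a local variable here purely as a simplification.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class InterpreterFrame:
    """Toy frame: bytecode array, current offset, and virtual registers."""
    bytecode: List[Tuple[str, int]]
    bytecode_offset: int = 0
    registers: List[int] = field(default_factory=lambda: [0] * 4)


def run(frame: InterpreterFrame) -> int:
    accumulator = 0  # simplification: not stored in the frame in this sketch
    while True:
        opcode, operand = frame.bytecode[frame.bytecode_offset]
        if opcode == "LdaSmi":      # load a small integer
            accumulator = operand
        elif opcode == "Star":      # store accumulator into a virtual register
            frame.registers[operand] = accumulator
        elif opcode == "Add":       # add a virtual register to the accumulator
            accumulator += frame.registers[operand]
        elif opcode == "Return":
            return accumulator
        else:
            raise ValueError(f"unknown opcode: {opcode}")
        frame.bytecode_offset += 1  # the frame always knows where it is


frame = InterpreterFrame([("LdaSmi", 2), ("Star", 0), ("LdaSmi", 3), ("Add", 0), ("Return", 0)])
print(run(frame))  # 5
```

Everything a stack walker needs to describe this function's state (which bytecode it is running, where it is, and what its locals hold) sits in the frame.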

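Sparkplug intentionally creates and maintains a frame layout that matches the interpreter's frame: whenever the interpreter would have stored a register value, Sparkplug stores one too. It does this for several reasons, chief among them that the debugger, profiler, and stack-trace machinery mentioned earlier keep working almost unchanged, because all they see is an interpreter frame.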

There is one small change to the interpreter stack frame: the bytecode offset is not kept up to date while Sparkplug code executes. Instead, V8 stores a two-way mapping from Sparkplug code address ranges to the corresponding bytecode offsets. When a stack frame access needs the “bytecode offset” for a Sparkplug frame, V8 looks up the currently executing instruction in this mapping and returns the corresponding offset. Likewise, to perform on-stack replacement (OSR) from the interpreter to Sparkplug, it looks up the current bytecode offset in the mapping and jumps to the corresponding Sparkplug instruction.
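One way to picture such a two-way mapping is a pair of sorted arrays searched with binary search. The sketch below is an assumption about how it could be done, not V8's actual encoding: `OffsetTable`, its method names, and the sample addresses are all invented. It relies on the fact that code emitted in a single linear pass has code addresses and bytecode offsets that increase together.

```python
import bisect
from typing import List, Tuple


class OffsetTable:
    """Toy two-way map between code addresses and bytecode offsets.

    Each entry is (code_start_address, bytecode_offset); both columns are
    ascending because the code was emitted in one pass over the bytecode.
    """

    def __init__(self, entries: List[Tuple[int, int]]) -> None:
        self._code_starts = [code for code, _ in entries]
        self._bytecode_offsets = [offset for _, offset in entries]

    def bytecode_offset_for(self, code_address: int) -> int:
        # Find the last range that starts at or before the address.
        # Simplification: the final range has no upper bound here.
        index = bisect.bisect_right(self._code_starts, code_address) - 1
        if index < 0:
            raise ValueError("address precedes the compiled code")
        return self._bytecode_offsets[index]

    def code_address_for(self, bytecode_offset: int) -> int:
        # Exact lookup: used when entering compiled code at a bytecode boundary.
        index = bisect.bisect_left(self._bytecode_offsets, bytecode_offset)
        if index == len(self._bytecode_offsets) or self._bytecode_offsets[index] != bytecode_offset:
            raise ValueError("no instruction starts at this bytecode offset")
        return self._code_starts[index]


table = OffsetTable([(0x1000, 0), (0x1008, 2), (0x1014, 3), (0x1020, 5)])
print(table.bytecode_offset_for(0x1010))   # 2
print(hex(table.code_address_for(3)))      # 0x1014
```

The first lookup direction serves stack walkers that ask a frame for its bytecode offset; the second serves a jump from the interpreter into compiled code.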

This leaves an unused slot in the stack frame where the bytecode offset would be, and it cannot simply be removed if the rest of the stack is to stay unchanged. Sparkplug re-purposes this slot to cache the “feedback vector” for the currently executing function. This vector stores object shape data and has to be loaded for most operations. The only catch is that V8 has to be careful around OSR to swap in either the correct bytecode offset or the correct feedback vector for this slot.

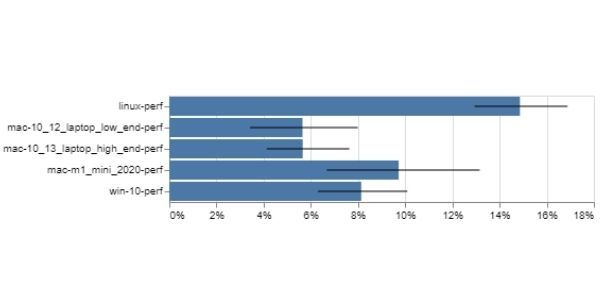

Speedometer is a benchmark that tries to emulate real-world website framework usage. It does so by building a TODO-list-tracking web application with several popular frameworks and then stress-testing that application's performance when adding and removing TODOs. It is a good reflection of real-world loading and interaction behavior, and improvements in Speedometer have been reflected in real-world metrics.

Sparkplug improves the Speedometer score by 5-10%, depending on the bot.

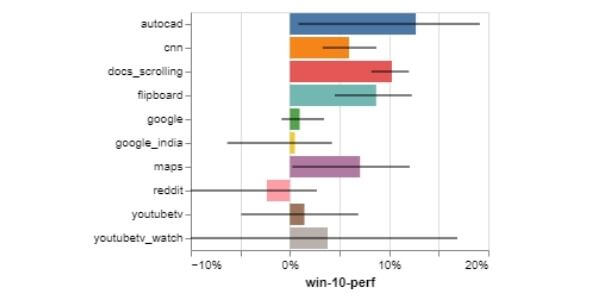

Speedometer is a good benchmark, but it tells only part of the story. There is also a set of “browsing benchmarks”: recordings of real websites that can be replayed with a little scripted interaction, giving a more realistic view of how the various metrics behave in the real world.

On these browsing benchmarks, the metric to look at is “V8 main-thread time”, which measures the total time spent in V8 on the main thread. That includes compilation and execution, but excludes streaming parsing and background optimized compilation, which run off the main thread. It is the best way to see how well Sparkplug pays for itself while excluding other sources of benchmark noise. The results vary, but they look good overall, with improvements on the order of 5-15%.

Sparkplug gives end users a faster experience on the web. Google's V8 JavaScript engine changed the competition between browser makers: it allowed developers to write large browser applications in JavaScript and gave Google Chrome a head start over other browsers. The changes described above make Chrome faster than before. Stay tuned for more performance improvements like this!
