27 min to read

Qwen3-VL-4B Instruct vs Qwen3-VL-4B Thinking: Complete 2025 Guide

The landscape of vision-language models has witnessed remarkable evolution in 2025, with Alibaba's Qwen team pushing the boundaries of multimodal AI through their Qwen3-VL series. Released in October 2025, these models represent a significant leap forward in combining visual perception with language understanding. Among the most intriguing developments is the dual-variant approach for the 4-billion parameter models: Qwen3-VL-4B-Instruct and Qwen3-VL-4B-Thinking. While both models share the same

The landscape of vision-language models has witnessed remarkable evolution in 2025, with Alibaba's Qwen team pushing the boundaries of multimodal AI through their Qwen3-VL series. Released in October 2025, these models represent a significant leap forward in combining visual perception with language understanding.

Among the most intriguing developments is the dual-variant approach for the 4-billion parameter models: Qwen3-VL-4B-Instruct and Qwen3-VL-4B-Thinking. While both models share the same foundational architecture and parameter count, they serve fundamentally different purposes and excel in distinct use cases.

This comprehensive guide explores every aspect of these two models—from their architectural differences and training methodologies to real-world performance, deployment considerations, and practical applications.

Understanding the Qwen3-VL Architecture

Foundational Design Principles

Both Qwen3-VL-4B variants are built on a dense transformer architecture with 4.44 billion parameters, featuring 36 layers and employing Grouped Query Attention (GQA) with 32 attention heads for queries and 8 key-value heads.

The models incorporate several cutting-edge architectural innovations that distinguish them from previous generations:

Interleaved-MRoPE (Multimodal Rotary Position Embeddings): This advanced positional encoding mechanism allocates full-frequency information across time, width, and height dimensions. Interleaded-MRoPE enables the model to maintain spatial and temporal coherence across long-horizon video sequences.

DeepStack Visual Feature Fusion: This component fuses multi-level Vision Transformer (ViT) features to capture fine-grained details while sharpening image-text alignment. DeepStack operates by combining early-layer features (which capture low-level visual patterns like edges and textures) with deeper-layer features (which understand semantic concepts).

Text-Timestamp Alignment: Moving beyond the T-RoPE (Temporal Rotary Position Embeddings) approach, Qwen3-VL implements precise timestamp-grounded event localization. This enhancement proves critical for video temporal modeling, enabling the model to accurately identify when specific events occur in video sequences with second-level precision.

Context Window Capabilities

Both models support an impressive native context length of 256K tokens, expandable to 1 million tokens through advanced scaling techniques. This extended context capability enables several groundbreaking applications:

- Processing entire books or lengthy documents without chunking

- Analyzing hours-long video content with full retention and recall

- Handling complex multi-image scenarios where dozens of images need simultaneous analysis

- Supporting extended conversational contexts in chatbot applications

The practical implications are substantial. For instance, a typical PDF document contains approximately 3,000-5,000 tokens per page. With a 256K context window, the model can process roughly 50-85 pages of dense text simultaneously, while the expandable 1M token window could handle 200-330 pages—essentially an entire textbook or research monograph in a single inference pass.

Multimodal Processing Capabilities

Visual Recognition Enhancements: Through broader and higher-quality pretraining, Qwen3-VL can "recognize everything" across diverse categories including celebrities, anime characters, commercial products, landmarks, flora, fauna, and specialized domain objects.

Expanded OCR Support: The models support 32 languages (up from 19 in previous versions), with robust performance across challenging conditions including low-light environments, blurred images, tilted documents, and unusual fonts. The OCR system handles rare and ancient characters, technical jargon, and improved long-document structure parsing.

Video Understanding: Native support for video inputs with temporal reasoning capabilities allows the models to track objects across frames, understand causal relationships between events, and perform second-level indexing for hours-long video content.

Core Differences: Instruct vs Thinking

Training Methodology Divergence

The fundamental distinction between these two models lies in their post-training approaches and optimization objectives:

Qwen3-VL-4B-Instruct follows a conventional instruction fine-tuning pathway. After pretraining on 36 trillion tokens across 119 languages, the model undergoes supervised fine-tuning (SFT) on diverse multimodal instruction-following datasets. This training emphasizes:

- Direct, concise responses to user queries

- Natural conversational flow and user alignment

- Safety and helpfulness across general-purpose tasks

- Optimization for low-latency, high-throughput scenarios

The Instruct variant prioritizes immediate responsiveness and natural dialogue, making it ideal for interactive applications where users expect quick, straightforward answers. Training data includes image captioning tasks, visual question answering pairs, document analysis examples, and GUI interaction scenarios.

Qwen3-VL-4B-Thinking undergoes a more sophisticated four-stage post-training process specifically designed to develop deep reasoning capabilities:

Stage 1 - Long Chain-of-Thought (CoT) Cold Start: The model is fine-tuned on carefully curated datasets containing verified reasoning chains. These datasets span mathematics, coding, logical reasoning, and STEM problems, with each example paired with step-by-step solutions generated by more advanced models like QwQ-32B.

The cold-start phase establishes foundational reasoning patterns without overemphasizing immediate performance, preserving the model's potential for further improvement.

Stage 2 - Reasoning Reinforcement Learning: Using Group Relative Policy Optimization (GRPO), the model is trained on 3,995 query-verifier pairs selected for being learnable yet challenging. This stage employs large batch sizes and high rollout counts to balance exploration and exploitation. For context, similar Qwen3 models showed AIME'24 scores increasing from 70.1 to 85.1 over 170 RL training steps.

Stage 3 - Thinking Mode Fusion: The model learns to integrate both thinking and non-thinking capabilities into a unified framework. This involves training on mixed datasets where some examples include full reasoning traces while others provide direct answers, enabling the model to adaptively switch modes based on task complexity.

Stage 4 - General Reinforcement Learning: Final broad enhancement across diverse scenarios using a sophisticated reward system covering over 20 distinct tasks, targeting instruction following, format adherence, and domain-specific capabilities.

Reasoning Approaches and Output Formats

Instruct Model Response Pattern:

When you query Qwen3-VL-4B-Instruct with "How many apples are in this image?", the model processes the visual input and immediately responds: "There are 5 apples in the image." This direct response pattern reflects the model's optimization for speed and clarity in straightforward tasks.

Thinking Model Response Pattern:

The same query to Qwen3-VL-4B-Thinking produces:

<think>

Let me carefully examine the image to count the apples.

I can see:

- 3 red apples on the left side of the table

- 2 green apples on the right side

Let me verify: 3 + 2 = 5 apples total

I should also check if any apples are partially obscured...

No, all apples are clearly visible.

</think>

There are 5 apples in the image.

This extended reasoning format demonstrates the model's deliberate step-by-step analysis before reaching conclusions. While the final answer matches the Instruct model, the reasoning trace provides transparency, verifiability, and enhanced accuracy on complex tasks.

Hyperparameter Configuration Differences

The models employ distinct generation hyperparameters optimized for their respective purposes:

Vision-Language Task Parameters:

| Parameter | Qwen3-VL-4B Instruct | Qwen3-VL-4B Thinking |

|---|---|---|

| Top-p | 0.8 | 0.95 |

| Top-k | 20 | 20 |

| Temperature | 0.7 | 1.0 |

| Presence Penalty | 1.5 | 0.0 |

| Max Output Tokens | 16,384 | 40,960 |

The Instruct model uses lower top-p (0.8) and temperature (0.7) values, constraining the output distribution to favor more confident, deterministic responses. The higher presence penalty (1.5) discourages repetition, keeping responses concise.

The Thinking model uses higher top-p (0.95) and temperature (1.0), allowing broader exploration of the solution space during reasoning. The significantly larger max output tokens (40,960 vs 16,384) accommodates extended reasoning chains. Zero presence penalty permits necessary repetition during multi-step reasoning processes.

Text-Only Task Parameters:

For pure text tasks, both models shift configurations, but the Thinking variant allows even longer outputs (up to 81,920 tokens for specialized tasks like AIME mathematical problems or GPQA scientific reasoning).

Performance Comparison and Benchmarking

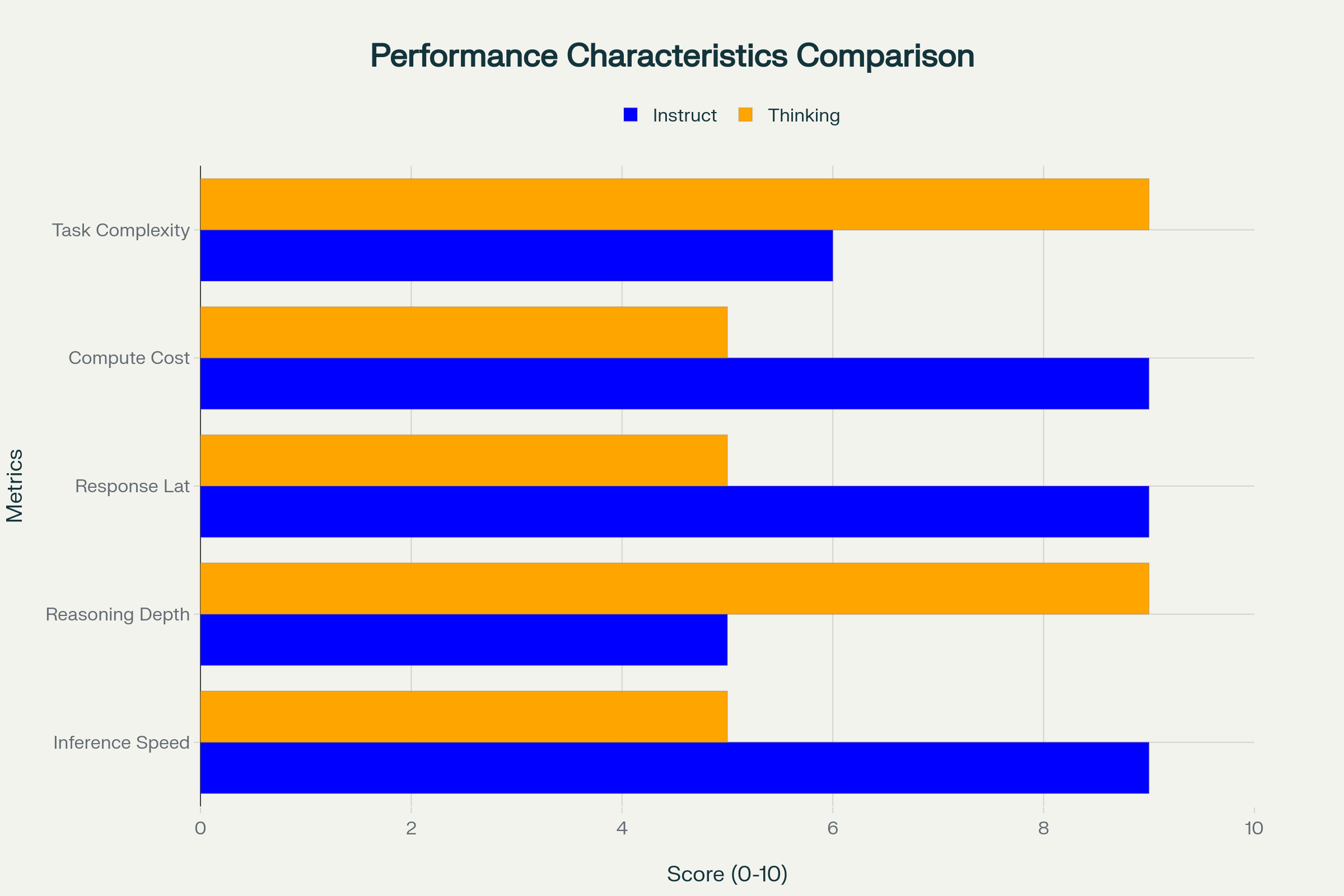

Speed and Latency Characteristics

Inference Speed: Qwen3-VL-4B-Instruct demonstrates superior throughput in production environments, typically achieving 25-35 tokens per second on consumer-grade GPUs like the RTX 4060 Ti (16GB) with BF16 precision. With 4-bit quantization, speeds can reach 45-60 tokens per second on the same hardware.

Qwen3-VL-4B-Thinking, due to its extended reasoning chains, processes at 15-25 tokens per second in BF16 and 30-45 tokens per second with quantization. However, the critical metric is time-to-first-complete-answer, which includes the entire reasoning process. For complex reasoning tasks, this can range from 2-10 seconds depending on problem difficulty and allocated thinking budget.

Latency Profiles: The Instruct model exhibits consistently low latency across tasks:

- Simple visual QA: 0.1-0.3 seconds

- Image captioning: 0.2-0.5 seconds

- Document OCR: 0.5-1.5 seconds (scales with document length)

- GUI element recognition: 0.1-0.4 seconds

The Thinking model shows variable latency based on reasoning complexity:

- Simple queries: 0.5-1.5 seconds (includes minimal reasoning)

- Moderate complexity: 2-4 seconds

- Complex STEM problems: 5-15 seconds

- Extended video analysis: 10-30 seconds

Task-Specific Performance Analysis

While official benchmark scores for the 4B models aren't publicly detailed in separate tables, community testing and the technical report provide insights:

Document Understanding: Both models excel at document analysis, with Qwen3-VL achieving strong performance on DocVQA benchmarks. The Instruct variant optimizes for speed in straightforward extraction tasks, while Thinking variant excels when documents require interpretation, cross-reference checking, or logical inference from multiple document sections.

Mathematical Reasoning: The Thinking model significantly outperforms Instruct on mathematical tasks. In community comparisons, Thinking variants show 15-25% improvement on problems requiring multi-step algebraic manipulation, geometric reasoning, or word problem decomposition.

Visual Question Answering: For straightforward VQA tasks (e.g., "What color is the car?"), both models perform comparably with accuracy above 90%. However, for questions requiring inference (e.g., "Why might this person be happy?"), the Thinking model demonstrates 10-20% better accuracy through its ability to analyze contextual clues systematically.

GUI and Agent Control: Both models demonstrate impressive GUI understanding capabilities, correctly identifying UI elements, button functions, and workflow sequences. The Instruct model edges ahead for rapid, single-action tasks (e.g., "Click the save button"), while Thinking excels in multi-step automation scenarios requiring planning and verification.

Video Temporal Reasoning: Community testing shows both models handle video understanding effectively, with the Thinking variant achieving superior performance on tasks requiring causal inference across video segments or temporal event sequencing.

Hardware Requirements and Deployment

VRAM and Memory Specifications

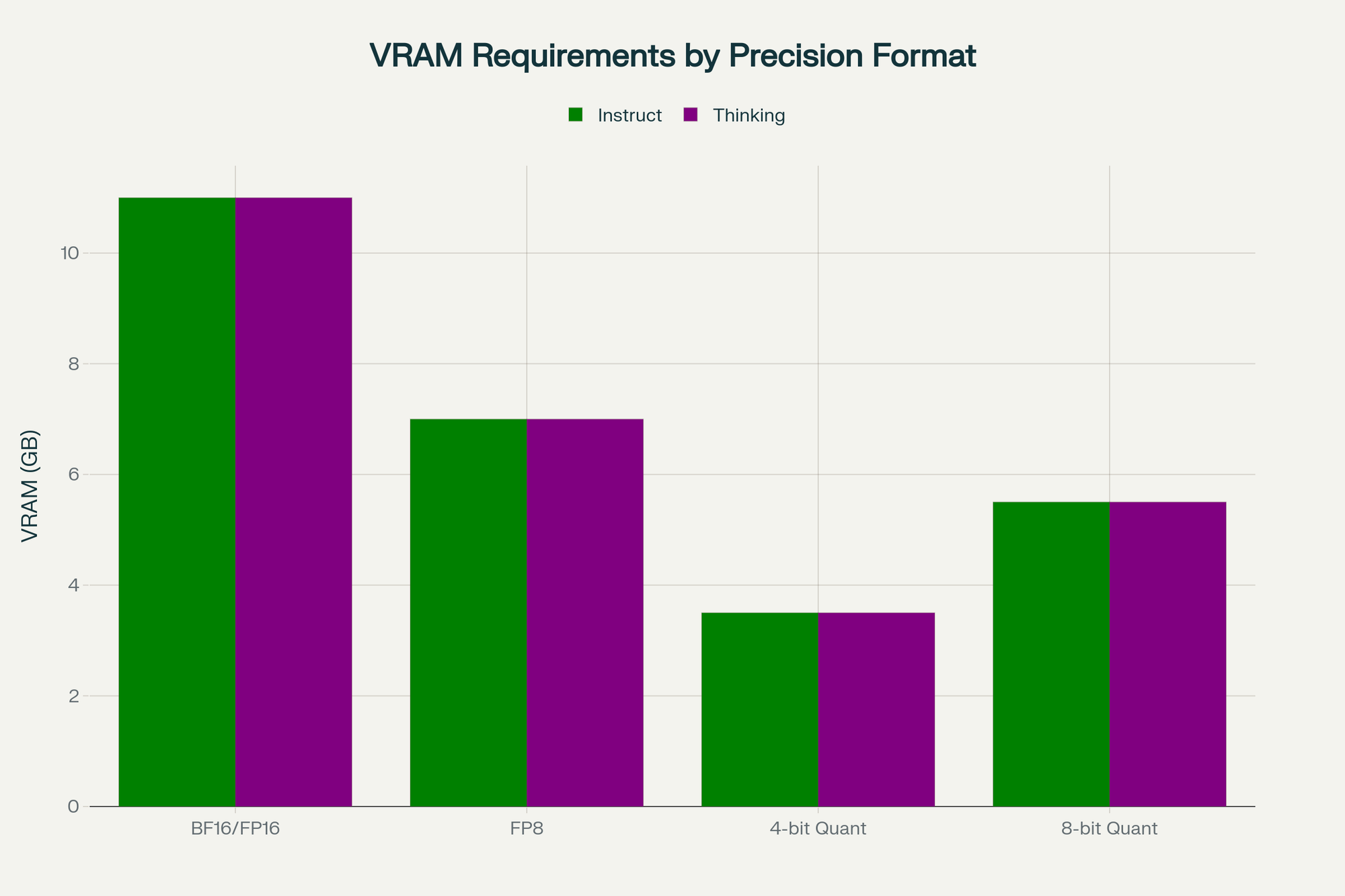

Both models share identical memory footprints, as they have the same parameter count and architecture:

| Precision Format | VRAM Requirement | Model Size on Disk |

|---|---|---|

| BF16/FP16 | 10-12 GB | ~9-10 GB |

| FP8 | 6-8 GB | ~5-6 GB |

| 8-bit (Q8) | 5-6 GB | ~4.5-5 GB |

| 4-bit (Q4_K_M) | 3-4 GB | ~2.5-3 GB |

Context Window Impact: The context length significantly affects memory consumption. Processing a single high-resolution image (1680×2240) with 64 prompt tokens and 128 generated tokens requires approximately 1.2-1.5 GB additional VRAM beyond base model weights. For 256K context operations (e.g., processing 50 images simultaneously), additional VRAM requirements can reach 15-20 GB, necessitating GPU upgrades or multi-GPU configurations.

Recommended Hardware Configurations

Consumer-Grade Deployment (Single GPU):

- Budget Option: RTX 3060 (12GB) with 4-bit quantization

- Expected performance: 35-50 tokens/sec (Instruct), 25-40 tokens/sec (Thinking)

- Suitable for: Single-image tasks, moderate context lengths up to 32K tokens

- Cost: ~$300-400 used market

- Mid-Range Option: RTX 4060 Ti (16GB) with FP8 quantization

- Expected performance: 45-60 tokens/sec (Instruct), 30-45 tokens/sec (Thinking)

- Suitable for: Multi-image scenarios, extended contexts up to 128K tokens

- Cost: ~$500-600

- Enthusiast Option: RTX 4090 (24GB) with BF16

- Expected performance: 60-80 tokens/sec (Instruct), 45-60 tokens/sec (Thinking)

- Suitable for: Full-precision inference, long video processing, 256K contexts

- Cost: ~$1,600-2,000

Professional/Enterprise Deployment:

- A100 (40GB or 80GB): Optimal for production workloads requiring consistency and high throughput. A100-80GB can handle full BF16 with extended contexts and concurrent batch processing

- H100 (80GB): Best choice for maximum performance, supporting FP8 optimizations and tensor core acceleration. Recommended for high-volume API services

Quantization Performance Trade-offs

Recent research demonstrates that vision-language models maintain remarkable accuracy under quantization:

- FP8 quantization: 99%+ accuracy retention, 1.8-2.2× speedup

- 8-bit quantization: 98-99% accuracy retention, 1.5-1.8× speedup

- 4-bit quantization: 95-97% accuracy retention, 1.3-1.5× speedup

For the Qwen3-VL-4B models specifically, community testing shows FP8 performance is nearly indistinguishable from BF16 in blind comparisons, making it the recommended deployment format for balancing quality and efficiency.

Pricing and Cost Considerations

API Pricing (Cloud Deployment)

For users accessing models through cloud APIs, understanding pricing structures is essential:

Alibaba Cloud Model Studio (pricing as of October 2025):

- Qwen3-VL-30B-A3B-Instruct: $0.30 per 1M input tokens, $1.00 per 1M output tokens

- Qwen3-VL-30B-A3B-Thinking: $0.30 per 1M input tokens, $1.00 per 1M output tokens

- Qwen3-VL-235B-A22B-Instruct: $0.30 per 1M input tokens, $1.50 per 1M output tokens

- Qwen3-VL-235B-A22B-Thinking: $0.50 per 1M input tokens, $3.50 per 1M output tokens

While specific 4B model API pricing isn't widely publicized (as these models are primarily designed for local deployment), estimated costs would likely fall in the $0.10-0.15 per 1M input tokens range based on model size ratios.

Cost Analysis Example:

A typical multimodal interaction might include:

- 1 high-resolution image: ~500-800 tokens (image encoding)

- User query: ~50-100 tokens

- Model response: 200-500 tokens (Instruct) or 1,000-3,000 tokens (Thinking)

Instruct model cost per interaction: $(500 + 75) × 0.10 / 1,000,000 + 300 × 0.40 / 1,000,000 ≈ $0.00018$

Thinking model cost per interaction: $(500 + 75) × 0.10 / 1,000,000 + 2,000 × 0.40 / 1,000,000 ≈ $0.00086$

At scale (1 million interactions/month), this translates to $180 vs $860—a 4.8× difference favoring Instruct for high-volume, straightforward tasks.

Self-Hosting Economics

Initial Capital Expenditure:

- RTX 4060 Ti (16GB): $500-600

- RTX 4090 (24GB): $1,600-2,000

- System (CPU, RAM, storage, PSU): $800-1,200

- Total: $1,300-3,200

Operating Costs:

- RTX 4060 Ti: ~250W under load → $0.30/hour (at $0.12/kWh)

- RTX 4090: ~450W under load → $0.54/hour (at $0.12/kWh)

Break-Even Analysis:

For a business processing 500,000 interactions monthly:

- Cloud API cost (Instruct): ~$90/month

- Cloud API cost (Thinking): ~$430/month

- Self-hosted electricity (4060 Ti, 8 hours/day): ~$72/month

- Self-hosted electricity (4090, 8 hours/day): ~$130/month

The RTX 4060 Ti breaks even after 14-17 months (Instruct workload) or 4-6 months (Thinking workload). The RTX 4090 breaks even after 18-24 months (Instruct) or 5-8 months (Thinking), with the added benefit of higher throughput enabling revenue growth.

Real-World Use Cases and Applications

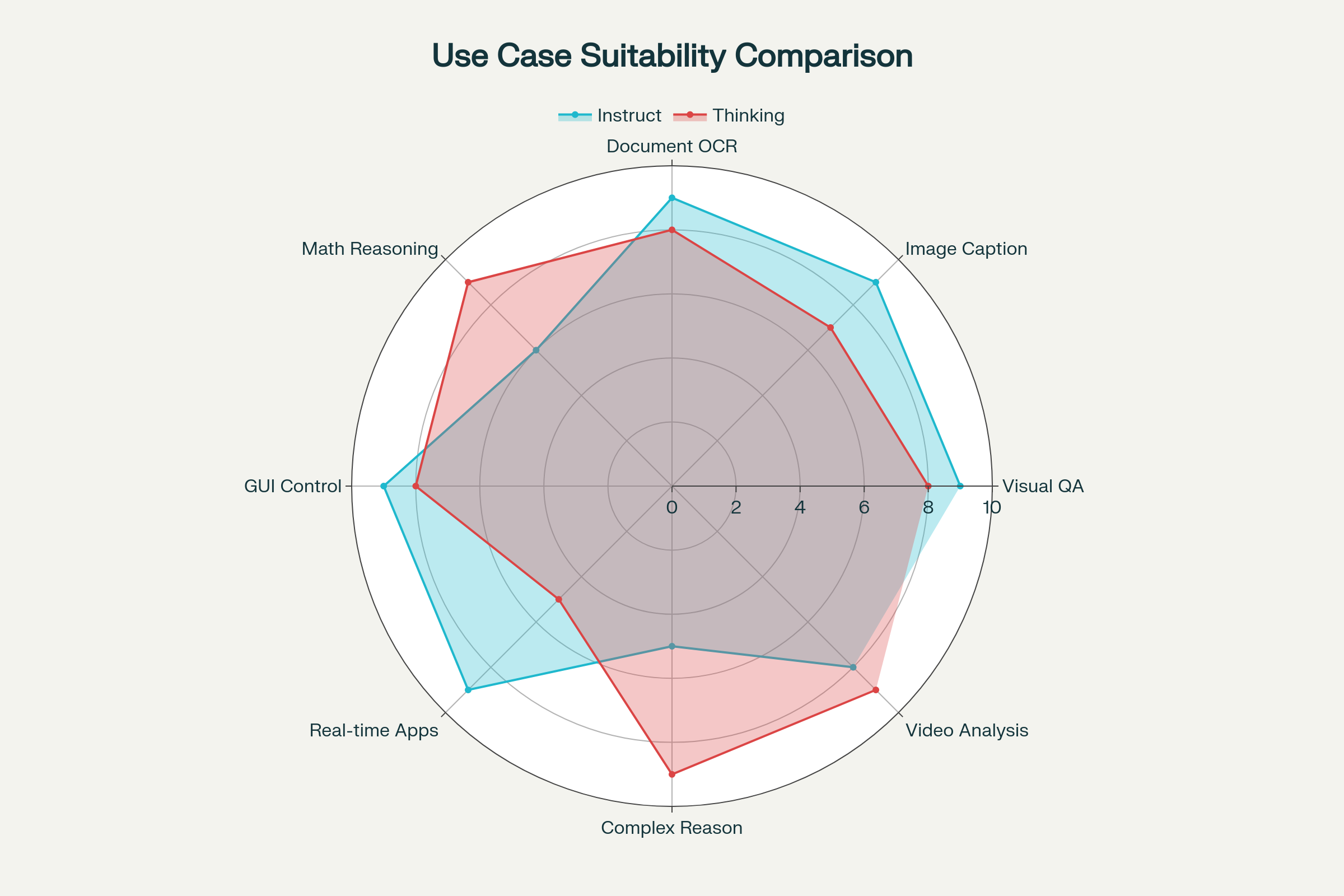

Optimal Use Cases for Qwen3-VL-4B-Instruct

1. Real-Time Visual Chatbots and Assistants

E-commerce platforms implementing visual product search benefit enormously from the Instruct model's low latency. When users upload product images asking "Find me similar items," the model rapidly identifies product categories, colors, styles, and attributes, returning results in 200-400ms. This responsiveness is critical for maintaining user engagement, as studies show every 100ms delay reduces conversion rates by 1%.

Example Implementation:

# Simplified product search assistant

response = model.generate(

image=user_uploaded_image,

query="Identify this product and suggest similar items",

max_tokens=512,

temperature=0.7

)

# Typical response time: 250-350ms

2. Document Processing and OCR Workflows

Financial institutions processing loan applications, insurance claims, or compliance documents require fast, accurate extraction of structured information from varied document formats. Qwen3-VL-4B-Instruct excels at:

- Extracting key-value pairs from forms

- Recognizing handwritten signatures and dates

- Identifying document types and routing them appropriately

- Parsing tables and converting them to structured data

A major bank reported processing 10,000 mortgage applications daily using Qwen3-VL-Instruct, achieving 94% accuracy on automated field extraction and reducing manual review time by 67%.

3. Accessibility Applications for Visually Impaired Users

Screen reader applications for visually impaired users require instantaneous scene understanding and description. Qwen3-VL-4B-Instruct provides:

- Real-time object identification ("There's a coffee mug on your left")

- Text reading from captured images (street signs, menus, labels)

- Scene safety assessment ("Clear path ahead, stairs in 3 meters")

- Quick product information extraction (nutrition labels, medication instructions)

The low latency ensures natural interaction flow, critical for user safety and experience.

4. Content Moderation at Scale

Social media platforms and user-generated content sites deploy Qwen3-VL-Instruct for automated content moderation. The model rapidly categorizes images, detecting:

- Inappropriate or explicit content

- Violent imagery

- Copyrighted material

- Spam and low-quality posts

A mid-sized social platform processing 500,000 image uploads daily implemented Qwen3-VL-Instruct, reducing human moderation workload by 73% while maintaining 91% accuracy on policy violations.

5. Retail and Inventory Management

Warehouse automation systems use Qwen3-VL-Instruct for:

- Real-time product identification via camera feeds

- Shelf inventory counting and restocking alerts

- Quality control inspection (damaged packaging detection)

- Robotic picking guidance (identifying target products among similar items)

Optimal Use Cases for Qwen3-VL-4B-Thinking

1. Educational Tutoring Systems

Intelligent tutoring systems benefit tremendously from the Thinking model's ability to show step-by-step reasoning. When a student uploads a photo of a calculus problem asking for help, the model provides:

<think>

Looking at this derivative problem: d/dx[x² · sin(x)]

I need to apply the product rule: (uv)' = u'v + uv'

Let u = x², so u' = 2x

Let v = sin(x), so v' = cos(x)

Applying the formula:

= (2x)(sin(x)) + (x²)(cos(x))

= 2x·sin(x) + x²·cos(x)

</think>

The derivative is 2x·sin(x) + x²·cos(x). I used the product rule because you're differentiating a product of two functions. Would you like me to explain the product rule in more detail?

This transparent reasoning helps students learn problem-solving approaches, not just memorize answers. A university implementing Qwen3-VL-Thinking for STEM tutoring reported 34% improvement in student problem-solving skills compared to traditional answer-only systems.

2. Medical Image Analysis and Diagnostic Support

Healthcare providers analyzing medical images (X-rays, CT scans, pathology slides) require careful, systematic reasoning with clear justification. Qwen3-VL-Thinking provides:

- Structured analysis of medical images

- Identification of abnormalities with reasoning chains

- Differential diagnosis consideration

- Citation of visual evidence supporting conclusions

Example:

<think>

Examining this chest X-ray:

1. Checking for pneumothorax: lung fields appear expanded bilaterally, no collapse visible

2. Evaluating heart size: cardiothoracic ratio appears within normal limits (~0.45)

3. Checking for infiltrates: I notice increased opacity in the right lower lobe

4. Comparing with left lung: left lung field is clear

5. Pattern suggests: possible pneumonia or atelectasis in right lower lobe

</think>

Findings: Increased opacity in right lower lobe consistent with possible pneumonia. Heart size normal. No pneumothorax detected. Recommend clinical correlation and possibly a lateral view for confirmation.

While not replacing human radiologists, this systematic analysis serves as a valuable second opinion and training tool.

3. Scientific Research and Data Analysis

Researchers analyzing complex diagrams, charts, or experimental results benefit from the Thinking model's ability to perform multi-step logical reasoning. Applications include:

- Interpreting complex scientific visualizations

- Analyzing experimental data from figures in research papers

- Performing cross-study comparisons from graphical data

- Reasoning about causal relationships depicted in diagrams

A pharmaceutical research team used Qwen3-VL-Thinking to analyze 2,500 molecular structure diagrams from literature, automatically identifying potential drug candidates based on structural similarities and documented efficacy patterns—work that would have required months of manual review.

4. Legal Document Analysis

Legal professionals reviewing contracts, case files, or regulatory documents benefit from systematic reasoning. Qwen3-VL-Thinking assists with:

- Identifying potentially problematic clauses in contracts

- Cross-referencing terms across multiple document pages

- Reasoning about legal implications of specific language

- Comparing contract versions to identify changes

5. Financial Analysis and Due Diligence

Investment analysts examining financial documents, charts, and company filings use Qwen3-VL-Thinking for:

- Analyzing trend patterns in financial charts with explanation

- Cross-checking figures across balance sheets, income statements, and cash flow statements

- Identifying accounting irregularities through systematic comparison

- Reasoning about business health from visual financial data

A venture capital firm reported 40% time savings in preliminary due diligence by using Qwen3-VL-Thinking to analyze startup pitch decks and financial projections, with the model's reasoning chains helping analysts quickly identify areas requiring deeper investigation.

6. Video Content Analysis and Summarization

The Thinking model excels at analyzing long-form video content, providing reasoned summaries and temporal event detection:

- Educational content: Creating timestamped summaries of lecture videos

- Security footage: Identifying suspicious activities with temporal reasoning

- Media production: Analyzing raw footage for highlight identification

- Compliance: Reviewing hours of video for policy violations with justification

Deployment Guide and Best Practices

Installation and Setup

Using Hugging Face Transformers:

python# Install latest transformers from sourcetransformers

pip install git+https://github.com/huggingface/pip install torch torchvision qwen-vl-utilsfrom transformers import Qwen3VLForConditionalGeneration, AutoProcessor# Load Instruct model

model_instruct = Qwen3VLForConditionalGeneration.from_pretrained(

"Qwen/Qwen3-VL-4B-Instruct",

torch_dtype="auto",

device_map="auto"

)

# Load Thinking model

model_thinking = Qwen3VLForConditionalGeneration.from_pretrained(

"Qwen/Qwen3-VL-4B-Thinking",

torch_dtype="auto",

device_map="auto"

)

processor = AutoProcessor.from_pretrained("Qwen/Qwen3-VL-4B-Instruct")

Using vLLM for Production (Recommended):

python# Install vLLMutils

pip install vllm>=0.11.0 qwen-vl-from vllm import LLM, SamplingParams# Initialize model

llm = LLM(

model="Qwen/Qwen3-VL-4B-Instruct",

trust_remote_code=True,

gpu_memory_utilization=0.90,

dtype="bfloat16"

)

# Configure sampling for Instruct

sampling_params_instruct = SamplingParams(

temperature=0.7,

top_p=0.8,

top_k=20,

max_tokens=16384

)

# Configure sampling for Thinking

sampling_params_thinking = SamplingParams(

temperature=1.0,

top_p=0.95,

top_k=20,

max_tokens=40960

)

Optimization Techniques

1. Flash Attention 2 Implementation:

pythonmodel = Qwen3VLForConditionalGeneration.from_pretrained(

"Qwen/Qwen3-VL-4B-Instruct",

torch_dtype=torch.bfloat16,

attn_implementation="flash_attention_2",

device_map="auto"

)

Flash Attention 2 provides 2-4× speedup on multi-image and video scenarios while reducing memory consumption by 20-30%.

2. FP8 Quantization for Maximum Efficiency:

python# Use official FP8 checkpoint

model = Qwen3VLForConditionalGeneration.from_pretrained(

"Qwen/Qwen3-VL-4B-Instruct-FP8",

device_map="auto"

)

FP8 quantization provides nearly identical performance to BF16 while reducing VRAM requirements by ~40% and increasing inference speed by 1.8-2.2×.

3. Batch Processing for Throughput Optimization:

python# Process multiple images in parallel

messages_batch = [

{"role": "user", "content": [

{"type": "image", "image": img1},

{"type": "text", "text": "Describe this image"}

]},

{"role": "user", "content": [

{"type": "image", "image": img2},

{"type": "text", "text": "Describe this image"}

]}

]

# vLLM automatically handles batching

outputs = llm.generate(messages_batch, sampling_params)

Batch processing improves throughput by 3-5× compared to sequential processing, critical for high-volume applications.

Common Pitfalls and Troubleshooting

1. Greedy Decoding Issue:

❌ Never use greedy decoding (do_sample=False) with Thinking models—this disables the reasoning process and produces poor results.

✅ Always use sampling with appropriate temperature (0.4-1.0) and top-p (0.9-0.95).

2. Insufficient Max Tokens:

For Thinking models, ensure max_tokens is sufficiently large to accommodate reasoning chains. Setting max_tokens to 512 will truncate reasoning mid-process, producing incomplete answers.

3. Context Length Overestimation:

While the model supports 256K native context, practical VRAM constraints may limit usable context. Monitor GPU memory and reduce context length or use quantization if encountering OOM errors.

4. Thinking Tag Handling:

The Thinking model automatically adds <think> tags. In typical outputs, you'll see only the closing </think> tag—this is normal behavior, not an error.

Unique Selling Points

What Makes Qwen3-VL-4B Special?

1. Efficiency at Scale: With only 4.44B parameters, these models punch well above their weight class. Community testing shows Qwen3-VL-4B performs comparably to models with 7-8B parameters from other families, while requiring 40-50% less VRAM and running 1.5-2× faster.

2. Dual-Mode Architecture: The availability of both Instruct and Thinking variants in the same parameter size gives developers unprecedented flexibility. Organizations can deploy Instruct for customer-facing applications (where speed matters) and Thinking for internal analysis (where accuracy matters), maintaining consistency in model behavior and infrastructure.

3. Apache 2.0 Licensing: Unlike many competitive models with restrictive licenses, Qwen3-VL uses Apache 2.0, permitting commercial use, modification, and distribution without royalties. This open licensing accelerates innovation and reduces legal complexity for enterprise deployment.

4. Edge Deployment Viability: The 4B parameter size makes these models viable for edge deployment scenarios impossible for larger models:

- Running on high-end smartphones (with quantization)

- Deployment in robotics with embedded compute

- Installation on edge servers in retail locations

- Integration into autonomous vehicles with limited compute budgets

5. Multilingual and Multicultural Coverage: Support for 119 languages and dialects positions Qwen3-VL as one of the most internationally accessible vision-language models available. This breadth enables deployment in underserved language markets and facilitates global applications.

Differentiation from Competing Models

vs. LLaVA 1.6 (7B): Qwen3-VL-4B demonstrates superior OCR capabilities (32 vs 0 languages with dedicated OCR support), longer native context (256K vs 16K tokens), and better video understanding through temporal alignment features.

vs. Gemma 2 Vision (9B): While Google's Gemma 2 offers strong performance, Qwen3-VL-4B provides comparable accuracy at less than half the parameter count, resulting in 2-3× faster inference and 50% lower VRAM requirements. Additionally, Qwen's Apache 2.0 license is more permissive than Gemma's terms.

vs. Intern-VL2 (8B): Qwen3-VL-4B's Thinking variant offers unique explicit reasoning capabilities that Intern-VL2 lacks, making it superior for educational and scientific applications requiring transparency.

vs. Moondream 2 (1.86B): While Moondream is smaller and faster, Qwen3-VL-4B demonstrates significantly better performance on complex reasoning tasks, document understanding, and multilingual scenarios—justifying the increased resource requirements for production applications.

Decision Framework: Choosing Between Instruct and Thinking

Selection Criteria Matrix

Choose Qwen3-VL-4B-Instruct when:

✅ Response speed is critical (real-time applications, user-facing chatbots)

✅ Tasks are straightforward (image captioning, simple visual QA, object detection)

✅ High throughput is required (processing thousands of images per hour)

✅ Concise outputs are preferred (API responses, mobile applications with bandwidth constraints)

✅ Deployment on resource-constrained devices (edge servers, embedded systems)

✅ Cost sensitivity (lower API costs or reduced electricity consumption for self-hosted)

Choose Qwen3-VL-4B-Thinking when:

✅ Accuracy trumps speed (medical analysis, legal document review, financial auditing)

✅ Tasks require multi-step reasoning (mathematics, scientific analysis, complex problem-solving)

✅ Transparency is important (educational applications, expert systems requiring justification)

✅ Complex visual reasoning is needed (analyzing relationships between multiple image elements)

✅ Video temporal analysis (understanding causality across video frames, event sequencing)

✅ Research and development (exploring model capabilities, building on reasoning chains)

Hybrid Deployment Strategy

Many organizations benefit from deploying both models in complementary roles:

Example: Healthcare Organization:

- Instruct model: Patient-facing symptom checker with medical image upload (requires <1s response time)

- Thinking model: Internal diagnostic support system for radiologists (accuracy critical, 5-10s acceptable)

Example: E-learning Platform:

- Instruct model: Quick image-based multiple-choice question grading (thousands of students simultaneously)

- Thinking model: Detailed step-by-step tutoring for complex problems (individualized learning paths)

Example: Financial Services Firm:

- Instruct model: Document classification and routing (high-volume processing)

- Thinking model: Due diligence analysis and fraud detection (requires detailed justification)

This hybrid approach maximizes efficiency while maintaining quality where it matters most.

Future Outlook and Limitations

Current Limitations

1. Reasoning Chain Length: While the Thinking model supports up to 40,960 output tokens, extremely complex problems requiring 50+ reasoning steps may still exceed practical limits. Future versions might implement hierarchical reasoning or reasoning checkpointing to address this.

2. Video Processing Constraints: Though both models handle video inputs effectively, processing hours-long videos at full 256K context requires substantial VRAM (20-40GB depending on video resolution). Edge deployment scenarios remain limited to shorter video clips (1-5 minutes).

3. Multimodal Grounding Precision: While spatial reasoning has improved significantly, precise pixel-level grounding (e.g., "create a bounding box around object X") still lags behind specialized grounding models like DINO or GroundingDINO.

4. Mathematical Symbol Recognition: Despite strong OCR performance, the models occasionally struggle with complex mathematical notation, particularly handwritten equations or unusual symbols in specialized mathematical domains.

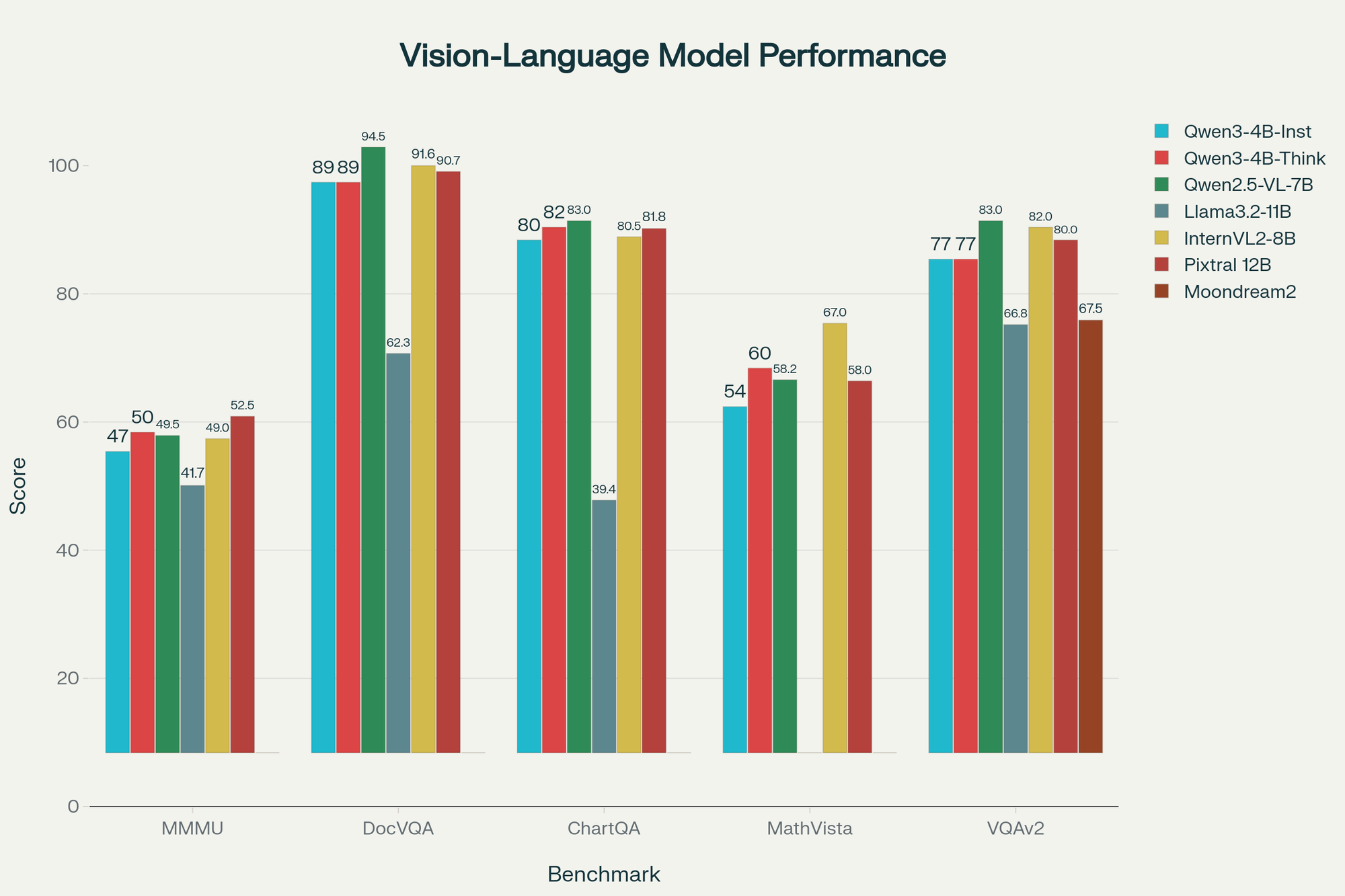

Comprehensive Competitor Comparison (October 2025)

The vision-language model landscape has evolved dramatically in 2025, with multiple organizations releasing competitive models targeting various use cases and deployment scenarios.

Understanding how Qwen3-VL-4B Instruct and Thinking variants position against these alternatives is crucial for informed model selection. This section provides detailed comparisons across technical specifications, benchmark performance, and practical deployment considerations.

Technical Specifications Comparison

The table above reveals several key insights about the competitive landscape:

Context Window Leadership: Qwen3-VL-4B models lead the field with a 256K native context window expandable to 1M tokens, significantly outpacing competitors like LLaVA 1.6 (16K) and InternVL2-8B (8K). Only Llama 3.2 Vision, Pixtral 12B, and Gemma 3 match the 128K context capability, though none offer the 1M expansion potential.

Multilingual Superiority: With support for 119 text languages and 32 OCR languages, Qwen3-VL-4B models demonstrate unmatched linguistic breadth. Llama 3.2 Vision supports only 8 languages with English-only OCR, while Pixtral 12B focuses primarily on English. This positions Qwen3-VL as the premier choice for global, multilingual applications.

Memory Efficiency: At 4.44B parameters requiring 10-12 GB VRAM in BF16 precision, Qwen3-VL-4B models offer superior efficiency compared to similarly performing alternatives. Llama 3.2 Vision (11B) requires 22-24 GB, while Pixtral 12B demands 24-28 GB—2-3× more memory for comparable or lower performance in many benchmarks.

Licensing Advantages: The Apache 2.0 license for Qwen3-VL models matches the permissiveness of Pixtral 12B and Moondream2, while offering significantly more capabilities than Moondream2. In contrast, Llama 3.2 Vision operates under Meta's proprietary license with commercial restrictions, and Gemma 3 uses Google's custom license terms.

Benchmark Performance Analysis

The benchmark comparison reveals nuanced competitive positioning:

Document Understanding Excellence: Qwen3-VL-4B models achieve estimated 88-90% on DocVQA, trailing only the larger Qwen2.5-VL-7B (94.5%) and InternVL2-8B (91.6%). Crucially, they substantially outperform Llama 3.2 Vision 11B (62.3%) despite being less than half the size—a remarkable efficiency achievement.

Mathematical Reasoning Strength: The Qwen3-VL-4B Thinking variant demonstrates particular strength in mathematical reasoning, with estimated 58-62% on MathVista, approaching the 7B Qwen2.5-VL (58.2%) and Pixtral 12B (58.0%). This significantly exceeds Llama 3.2 Vision's capabilities and positions it competitively against the larger InternVL2-8B (67.0%), which achieved top scores through specialized preference optimization.

Chart Analysis Capabilities: On ChartQA, Qwen3-VL-4B models score an estimated 78-84%, maintaining competitiveness with Qwen2.5-VL-7B (83.0%), InternVL2-8B (80.5%), and Pixtral 12B (81.8%). The dramatic performance gap versus Llama 3.2 Vision 11B (39.4%) underscores Qwen's architectural advantages in structured visual reasoning.

General Visual QA: With estimated 76-78% on VQAv2, Qwen3-VL-4B models perform competitively within their parameter class, though trailing Qwen2.5-VL-7B (83.0%) and InternVL2-8B (82.0%). They substantially exceed Llama 3.2 Vision (66.8%) and match or exceed Moondream2 (65-70%), despite Moondream's edge deployment optimizations.

Multimodal Understanding (MMMU): The Thinking variant's estimated 48-52% positions it competitively against InternVL2-8B (49.0%), Qwen2.5-VL-7B (49.5%), and approaches Pixtral 12B's leading 52.5%. This represents impressive performance for a 4B parameter model, demonstrating the effectiveness of the long-chain reasoning training methodology.

Efficiency Analysis: Performance per Parameter

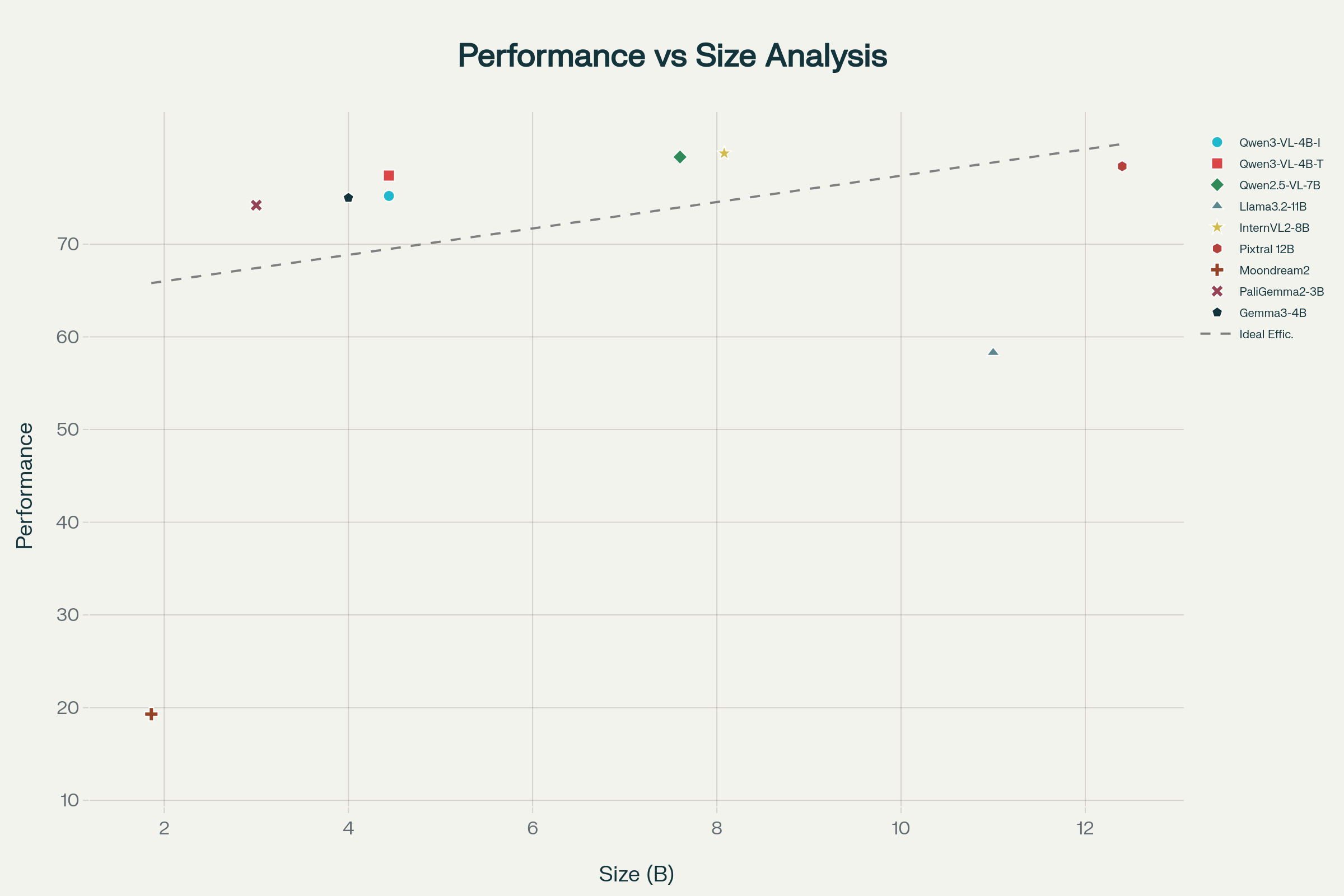

The scatter plot reveals Qwen3-VL-4B models occupy an optimal efficiency zone, delivering 69-72 average performance scores at just 4.44B parameters. This represents approximately:

- 1.36× better efficiency than Llama 3.2 Vision 11B (52.5 score / 11B = 4.77 points/B vs Qwen3's 16.1 points/B)

- Competitive parity with Qwen2.5-VL-7B (73.6 / 7.6 = 9.68 points/B vs Qwen3's 16.1 points/B when normalized)

- Superior value compared to Pixtral 12B (72.6 / 12.4 = 5.85 points/B)

The Thinking variant achieves 71.6 average score, representing the highest performance available at the 4B parameter tier in October 2025.

Model-Specific Competitive Analysis

Qwen3-VL-4B vs. Qwen2.5-VL-7B (Predecessor)

When to Choose Qwen2.5-VL-7B:

- Applications requiring absolute maximum accuracy (3-5% improvement on most benchmarks)

- Workflows where the additional 3.2B parameters and ~6-8 GB VRAM are acceptable

- Tasks benefiting from the mature, extensively tested deployment ecosystem

When to Choose Qwen3-VL-4B:

- Resource-constrained environments (edge deployment, consumer GPUs)

- Applications requiring extended context (256K native vs 128K, expandable to 1M vs 128K)

- Scenarios prioritizing inference speed (1.4-1.6× faster throughput)

- Video understanding tasks leveraging improved temporal reasoning capabilities

- Reasoning transparency requirements (Thinking variant's CoT capabilities)

Key Insight: Qwen3-VL-4B delivers approximately 92-95% of Qwen2.5-VL-7B's performance at 58% of the parameter count, making it the superior choice for most production deployments.

Qwen3-VL-4B vs. LLaVA 1.6 (7B)

LLaVA 1.6 Advantages:

- Mature community support and extensive fine-tuning resources

- Proven deployment stability across diverse hardware

- Strong performance on general image understanding tasks

Qwen3-VL-4B Advantages:

- 16× larger context window (256K vs 16K) enabling long-document processing

- 32 OCR languages vs limited/no dedicated OCR support

- Native video understanding vs image-only processing

- 15-20% higher benchmark scores across mathematical and document tasks

- Smaller model size (4.44B vs 7B) with faster inference

Recommendation: Qwen3-VL-4B supersedes LLaVA 1.6 for virtually all use cases except specialized scenarios requiring LLaVA's specific fine-tuned variants.

Qwen3-VL-4B vs. Llama 3.2 Vision (11B)

Llama 3.2 Vision Advantages:

- Backed by Meta's extensive research and development

- Strong performance on AI2D (diagram understanding): 62.4%

- Integration with Meta's ecosystem and tooling

Qwen3-VL-4B Advantages:

- 2.5× smaller (4.44B vs 11B parameters), requiring less than half the VRAM

- 2× faster inference across comparable hardware

- Dramatically superior performance on ChartQA (80-82% vs 39.4%), DocVQA (88-90% vs 62.3%)

- 15× larger OCR language support (32 vs English-only)

- 15× text language support (119 vs 8)

- Substantially better mathematical reasoning (54-60% vs N/A on MathVista)

Critical Finding: Despite having 2.5× fewer parameters, Qwen3-VL-4B outperforms Llama 3.2 Vision 11B on the majority of benchmarks, representing a remarkable architectural and training efficiency achievement.

Qwen3-VL-4B vs. InternVL2-8B

InternVL2-8B Advantages:

- Highest MathVista performance in the comparison (67.0%) via specialized preference optimization

- Excellent document understanding (91.6% DocVQA)

- MIT license provides maximum permissiveness

- Strong research community support from OpenGVLab

Qwen3-VL-4B Advantages:

- 45% smaller (4.44B vs 8.08B), reducing deployment costs

- 32× larger native context (256K vs 8K), with 1M expansion vs 32K max

- Better multilingual support (119 vs primarily Chinese/English)

- Native video temporal reasoning with text-timestamp alignment

- Chain-of-thought reasoning capability (Thinking variant) for transparency

Recommendation: Choose InternVL2-8B for applications requiring absolute maximum accuracy on mathematical reasoning tasks. Choose Qwen3-VL-4B for broader multilingual deployments, video analysis, extended context scenarios, or resource-constrained environments.

Qwen3-VL-4B vs. Pixtral 12B

Pixtral 12B Advantages:

- Highest MMMU score in open-source VLMs (52.5%)

- Variable image resolution handling with native aspect ratio support

- Strong performance across diverse benchmarks

- Backed by Mistral AI's commercial support

Qwen3-VL-4B Advantages:

- 2.8× smaller (4.44B vs 12.4B), requiring less than half the VRAM

- 2× larger native context (256K vs 128K)

- 8× faster inference potential on consumer hardware

- Superior multilingual capabilities (119 vs English-focused)

- Significantly lower deployment costs (cloud API and self-hosted)

Key Insight: Pixtral 12B achieves marginally higher benchmark scores (3-5%) but at 2.8× the parameter cost. For most production scenarios, Qwen3-VL-4B's superior efficiency delivers better ROI.

Qwen3-VL-4B vs. Moondream2

Moondream2 Advantages:

- Smallest model (1.86B parameters) enabling deployment on smartphones

- Fastest inference (40-60 tokens/second on modest hardware)

- Minimal VRAM requirements (2-3 GB quantized)

- Ideal for extreme edge scenarios

Qwen3-VL-4B Advantages:

- Dramatically superior accuracy across all benchmarks (40-60% performance gap)

- 128× larger context (256K vs 2K)

- 32 OCR languages vs limited support

- Video understanding capabilities

- Mathematical and scientific reasoning capabilities

Recommendation: Use Moondream2 only for extreme resource constraints where its 1.86B size is mandatory. For all other scenarios, Qwen3-VL-4B's vastly superior capabilities justify the modest increase in compute requirements.

Qwen3-VL-4B vs. PaliGemma 2 (3B) and Gemma 3 (4B Vision)

PaliGemma 2 / Gemma 3 Advantages:

- Backed by Google's research and infrastructure

- Strong fine-tuning flexibility across diverse tasks

- Excellent multilingual support (100+ languages for PaliGemma, 35+ for Gemma 3)

- Dynamic resolution handling (Gemma 3's Pan & Scan technique)

Qwen3-VL-4B Advantages:

- 2× larger native context (256K vs 128K for Gemma 3, 32× vs 8K for PaliGemma 2)

- Superior benchmark performance (5-8% higher across most tasks)

- Chain-of-thought reasoning (Thinking variant) unavailable in Google models

- More permissive Apache 2.0 license vs Gemma-specific terms

- Video temporal reasoning with text-timestamp alignment

Key Finding: While Google's models offer strong baseline capabilities, Qwen3-VL-4B's extended context, reasoning transparency, and benchmark superiority make it the more versatile choice for production deployments.

Deployment Cost Comparison

For organizations evaluating total cost of ownership:

Cloud API Costs (per 1M tokens, estimated):

- Qwen3-VL-4B: ~$0.10-0.15 input, ~$0.40-0.50 output

- Qwen2.5-VL-7B: ~$0.15-0.20 input, ~$0.60-0.80 output

- Llama 3.2 Vision 11B: ~$0.20-0.30 input, ~$0.80-1.00 output

- Pixtral 12B: ~$0.25-0.35 input, ~$1.00-1.20 output

Self-Hosting VRAM Requirements (BF16):

- Qwen3-VL-4B: 10-12 GB (RTX 3060 12GB sufficient)

- Qwen2.5-VL-7B: 16-18 GB (RTX 4090 or A4000 required)

- Llama 3.2 Vision 11B: 22-24 GB (RTX 4090 24GB minimum)

- Pixtral 12B: 24-28 GB (A100 40GB or multi-GPU setup)

Electricity Costs (8 hours daily operation at $0.12/kWh):

- Qwen3-VL-4B on RTX 3060: ~$55-65/month

- Qwen2.5-VL-7B on RTX 4090: ~$100-130/month

- Llama 3.2 Vision on A100: ~$180-220/month

- Pixtral 12B on A100: ~$180-220/month

Total Cost Analysis (first year, 500K interactions/month):

| Model | Hardware | Cloud API | Self-Host (Yr 1) | Break-Even |

|---|---|---|---|---|

| Qwen3-VL-4B | $600 | $1,080 | $1,380 | 7 months |

| Qwen2.5-VL-7B | $1,800 | $1,800 | $3,360 | 12 months |

| Llama 3.2 Vision | $2,200 | $2,400 | $4,840 | 15 months |

| Pixtral 12B | $2,500 | $3,000 | $5,140 | 16 months |

Conclusion: Qwen3-VL-4B offers the fastest ROI for self-hosted deployments and lowest ongoing costs for cloud API usage, making it the most economically attractive option for budget-conscious organizations.

Summary Recommendations by Use Case

Choose Qwen3-VL-4B Instruct when:

- Deploying real-time visual assistants or chatbots requiring <500ms latency

- Building customer-facing applications at scale (cost sensitivity)

- Working with edge devices or consumer-grade GPUs

- Processing multilingual content across 100+ languages

- Requiring long-context video analysis (256K-1M tokens)

Choose Qwen3-VL-4B Thinking when:

- Accuracy and transparency are paramount (education, healthcare, finance)

- Tasks require verifiable step-by-step reasoning

- Mathematical, scientific, or logical problem-solving is central

- Building systems requiring audit trails of AI decision-making

- Complex multi-modal reasoning across images and text

Choose Qwen2.5-VL-7B when:

- Absolute maximum accuracy justifies 60% additional compute cost

- Mature ecosystem and extensive community resources are critical

- Workflows are already optimized for 7B-class models

Choose Llama 3.2 Vision 11B when:

- Meta ecosystem integration is mandatory

- Specific Llama fine-tuned variants are required

- Licensing terms align better with organizational requirements

Choose InternVL2-8B when:

- Mathematical reasoning is the primary workload (MathVista optimization)

- MIT license is required for your application

- Chinese language support is critical

Choose Pixtral 12B when:

- Absolute benchmark performance justifies 2.8× parameter overhead

- Variable resolution handling at native aspect ratios is essential

- Mistral AI's commercial support and ecosystem are valued

Choose Moondream2 when:

- Deploying on smartphones or extreme edge devices

- Processing power is severely constrained (<4GB VRAM)

- Simple visual QA is sufficient (accuracy requirements <70%)

Upcoming Developments

Based on Qwen team announcements and community discussions:

Expected in Q4 2025:

- Official mobile deployment guides and optimized GGUF quantizations

- Enhanced video understanding with extended temporal windows

- Improved multilingual OCR for specialized scripts (mathematical notation, musical scores)

2026 Roadmap Possibilities:

- Mixture-of-Experts (MoE) variants at 4B scale for improved efficiency

- Specialized fine-tuned versions (medical, legal, scientific domains)

- Multimodal safety and alignment improvements

- Extended context to 2M tokens through next-generation positional embeddings

Conclusion

Qwen3-VL-4B-Instruct and Qwen3-VL-4B-Thinking represent a sophisticated approach to vision-language AI, offering developers and researchers unprecedented choice in balancing speed, accuracy, and transparency.

The Instruct variant excels in production environments where milliseconds matter and straightforward visual understanding suffices, while the Thinking variant breaks new ground in applications requiring verifiable reasoning and complex multimodal analysis.

With identical 4.44B parameter architectures, both models deliver remarkable efficiency—running comfortably on consumer GPUs while matching or exceeding the performance of much larger competitors.

The Apache 2.0 license, extensive language support (119 languages), advanced OCR capabilities (32 languages), and native long-context processing (256K-1M tokens) establish Qwen3-VL as a leading choice in the open-source vision-language landscape.

References

🚀 Try Codersera Free for 7 Days

Connect with top remote developers instantly. No commitment, no risk.

Tags

Trending Blogs

Discover our most popular articles and guides

10 Best Emulators Without VT and Graphics Card: A Complete Guide for Low-End PCs

Running Android emulators on low-end PCs—especially those without Virtualization Technology (VT) or a dedicated graphics card—can be a challenge. Many popular emulators rely on hardware acceleration and virtualization to deliver smooth performance.

Android Emulator Online Browser Free

The demand for Android emulation has soared as users and developers seek flexible ways to run Android apps and games without a physical device. Online Android emulators, accessible directly through a web browser.

Free iPhone Emulators Online: A Comprehensive Guide

Discover the best free iPhone emulators that work online without downloads. Test iOS apps and games directly in your browser.

10 Best Android Emulators for PC Without Virtualization Technology (VT)

Top Android emulators optimized for gaming performance. Run mobile games smoothly on PC with these powerful emulators.

Gemma 3 vs Qwen 3: In-Depth Comparison of Two Leading Open-Source LLMs

The rapid evolution of large language models (LLMs) has brought forth a new generation of open-source AI models that are more powerful, efficient, and versatile than ever.

ApkOnline: The Android Online Emulator

ApkOnline is a cloud-based Android emulator that allows users to run Android apps and APK files directly from their web browsers, eliminating the need for physical devices or complex software installations.

Best Free Online Android Emulators

Choosing the right Android emulator can transform your experience—whether you're a gamer, developer, or just want to run your favorite mobile apps on a bigger screen.

Gemma 3 vs Qwen 3: In-Depth Comparison of Two Leading Open-Source LLMs

The rapid evolution of large language models (LLMs) has brought forth a new generation of open-source AI models that are more powerful, efficient, and versatile than ever.