
Alibaba Wan 2.1 vs Google Veo 2 vs OpenAI Sora: Best Video Generation Model?

Video generation has advanced rapidly with the arrival of sophisticated AI models. Among the most notable are Alibaba's Wan 2.1, Google's Veo 2, and OpenAI's Sora, each of which has drawn attention for its ability to generate high-quality video.

This article provides a comprehensive comparison of these models, focusing on their architectures, functionalities, performance benchmarks, and potential applications.

Overview of Each Model

Alibaba Wan 2.1

- Architecture and Features: Wan 2.1 pairs a diffusion transformer with a spatio-temporal Variational Autoencoder (VAE) that reportedly reconstructs video 2.5 times faster than competing approaches. It supports text-to-video, image-to-video, and video editing, with output resolutions of 480P and 720P.

- Training Data: Wan 2.1 is trained on a massive dataset comprising 1.5 billion videos and 10 billion images, and it is reported to excel in motion smoothness and temporal consistency.

- Variants: It includes models like Wan2.1-I2V-14B for image-to-video synthesis and Wan2.1-T2V-1.3B for text-to-video tasks; the smaller 1.3B model is sized to run on consumer-grade GPUs.
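To make the idea of a spatio-temporal VAE more concrete, here is a minimal sketch of how such an encoder shrinks a clip before the generator works on it. The stride values (4 in time, 8 in space), the causal "first frame encoded alone" rule, and the 832×480 frame size are illustrative assumptions, not figures taken from this article; `latent_shape` and `compression_ratio` are hypothetical helper names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LatentShape:
    frames: int
    height: int
    width: int


def latent_shape(frames: int, height: int, width: int,
                 t_stride: int = 4, s_stride: int = 8) -> LatentShape:
    """Latent grid size for a causal spatio-temporal VAE.

    Assumption: the first frame is encoded on its own, and each following
    group of `t_stride` frames collapses into one latent frame. The stride
    defaults are illustrative, not confirmed values.
    """
    if (frames - 1) % t_stride or height % s_stride or width % s_stride:
        raise ValueError("input dimensions must align with the strides")
    return LatentShape(1 + (frames - 1) // t_stride,
                       height // s_stride,
                       width // s_stride)


def compression_ratio(frames: int, height: int, width: int,
                      t_stride: int = 4, s_stride: int = 8) -> float:
    """How many input positions map onto one latent position."""
    z = latent_shape(frames, height, width, t_stride, s_stride)
    return (frames * height * width) / (z.frames * z.height * z.width)


if __name__ == "__main__":
    # An 81-frame clip at an assumed 480P frame size of 832x480.
    print(latent_shape(81, 480, 832))
    print(round(compression_ratio(81, 480, 832), 1))
```

Under these assumptions the generator operates on a grid a couple of hundred times smaller than the raw clip, which is one reason a compact VAE matters for running on modest hardware.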

Google Veo 2

- Architecture and Features: While detailed information about Veo 2’s architecture is limited, Google’s models often leverage large-scale transformer-based architectures for video understanding and generation.

- Training Data: Google’s models typically draw from extensive datasets, supported by the company's vast data resources.

- Variants: Specific variants of Veo 2 are not widely disclosed, but Google generally provides models tailored for varying computational needs.

OpenAI Sora

- Architecture and Features: OpenAI describes Sora as a diffusion transformer that operates on spacetime patches of compressed video, though it is reportedly outperformed by Wan 2.1 in certain benchmarks.

- Training Data: OpenAI has not publicly disclosed specific details about Sora’s training data.

- Variants: OpenAI often releases models with varying parameter sizes to accommodate different computational requirements.

Technical Comparison

Architecture

| Model | Architecture | Key Features |
|---|---|---|
| Wan 2.1 | Spatio-temporal VAE | Fast video reconstruction, supports text-to-video, image-to-video, and video editing. |
| Google Veo 2 | Transformer-based (assumed) | Likely leverages large-scale transformer architectures for video tasks. |
| OpenAI Sora | Sophisticated architecture | Efficient video generation with less disclosed technical detail. |

Performance Benchmarks

- Wan 2.1: Reported to surpass OpenAI's Sora in motion smoothness and temporal consistency, and described by Alibaba as the first video model to support text effects in both Chinese and English.

- Google Veo 2: Limited publicly available benchmark data, but Google’s models are generally competitive in AI tasks.

- OpenAI Sora: Outperformed by Wan 2.1 in several performance metrics, suggesting room for optimization.
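For readers unfamiliar with what "temporal consistency" measures, the sketch below computes a toy proxy: the mean cosine similarity between consecutive frames. This is not the metric used in the benchmarks cited above, which typically compare learned features and not raw pixels; the function names and the flattened-list frame representation are assumptions made for illustration.

```python
import math
from typing import Sequence

Frame = Sequence[float]  # one flattened frame (pixel or feature values)


def cosine(a: Frame, b: Frame) -> float:
    """Cosine similarity of two equally sized vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def temporal_consistency(frames: Sequence[Frame]) -> float:
    """Mean cosine similarity between each frame and the next one.

    Values near 1.0 mean adjacent frames look alike (smooth video);
    lower values indicate flicker or abrupt changes.
    """
    if len(frames) < 2:
        raise ValueError("need at least two frames")
    pairs = zip(frames, frames[1:])
    return sum(cosine(a, b) for a, b in pairs) / (len(frames) - 1)


if __name__ == "__main__":
    steady = [[1.0, 2.0, 3.0], [1.0, 2.0, 3.1], [1.0, 2.1, 3.1]]
    flicker = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
    print(temporal_consistency(steady) > temporal_consistency(flicker))
```

A proxy like this rewards static video as much as smooth motion, which is why real benchmarks pair consistency scores with separate measures of motion quality.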

Training Data

| Model | Training Data |
|---|---|
| Wan 2.1 | 1.5 billion videos, 10 billion images |
| Google Veo 2 | Extensive datasets (details not available) |
| OpenAI Sora | Large datasets (details not disclosed) |

Functionalities and Applications

Video Generation Capabilities

- Wan 2.1: Supports text-to-video, image-to-video, and video editing with high-quality outputs at 480P and 720P resolutions. It can simulate real-world physics and object interactions.

- Google Veo 2: Expected to offer similar functionalities, though specific information remains scarce.

- OpenAI Sora: Prioritizes efficient video generation but with less publicly available information on specific features.

Accessibility and Democratization

- Wan 2.1: The Wan2.1-T2V-1.3B model runs on consumer-grade GPUs like the RTX 4090, making high-quality video generation accessible to a broader audience.

- Google Veo 2: Likely requires more computational resources, which could limit accessibility for smaller setups.

- OpenAI Sora: Closed-source nature restricts accessibility compared to open-source alternatives like Wan 2.1.
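A rough back-of-the-envelope calculation shows why parameter count matters for accessibility. The sketch below estimates the memory needed for model weights alone and compares it with the 24 GB of VRAM on an RTX 4090. It deliberately ignores activations, the text encoder, and the VAE, and it assumes 16-bit weights by default, so treat the result as a lower bound and not a hardware requirement; the helper names are hypothetical.

```python
# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}

RTX_4090_VRAM_GB = 24  # VRAM on an RTX 4090


def weight_memory_gb(params_billions: float, dtype: str = "fp16") -> float:
    """Memory for the weights only, in GB (1 GB = 10^9 bytes)."""
    return params_billions * BYTES_PER_PARAM[dtype]


def weights_fit(params_billions: float, vram_gb: float,
                dtype: str = "fp16") -> bool:
    """True if the weights alone fit in the given VRAM budget."""
    return weight_memory_gb(params_billions, dtype) <= vram_gb


if __name__ == "__main__":
    for name, size in [("Wan2.1-T2V-1.3B", 1.3), ("Wan2.1-I2V-14B", 14.0)]:
        gb = weight_memory_gb(size)
        fits = weights_fit(size, RTX_4090_VRAM_GB)
        print(f"{name}: ~{gb:.1f} GB of fp16 weights, fits in 24 GB: {fits}")
```

By this estimate the 1.3B model leaves most of a 24 GB card free for activations, while the 14B model's 16-bit weights alone exceed it, so running the larger variant on such a card would depend on techniques like quantization or offloading.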

Ethical Considerations and Future Directions

Ethical Implications

The rapid development of advanced video generation models raises ethical concerns, particularly around misinformation and deepfakes. Ensuring responsible use and development of these technologies is crucial.

Future Directions

- Advancements in Architecture: Future models may integrate more sophisticated architectures that boost performance while reducing computational demands.

- Increased Accessibility: Open-source models like Wan 2.1 are expected to drive democratization of video generation technology.

- Ethical Frameworks: Establishing comprehensive ethical guidelines will help mitigate misuse and promote positive innovation.

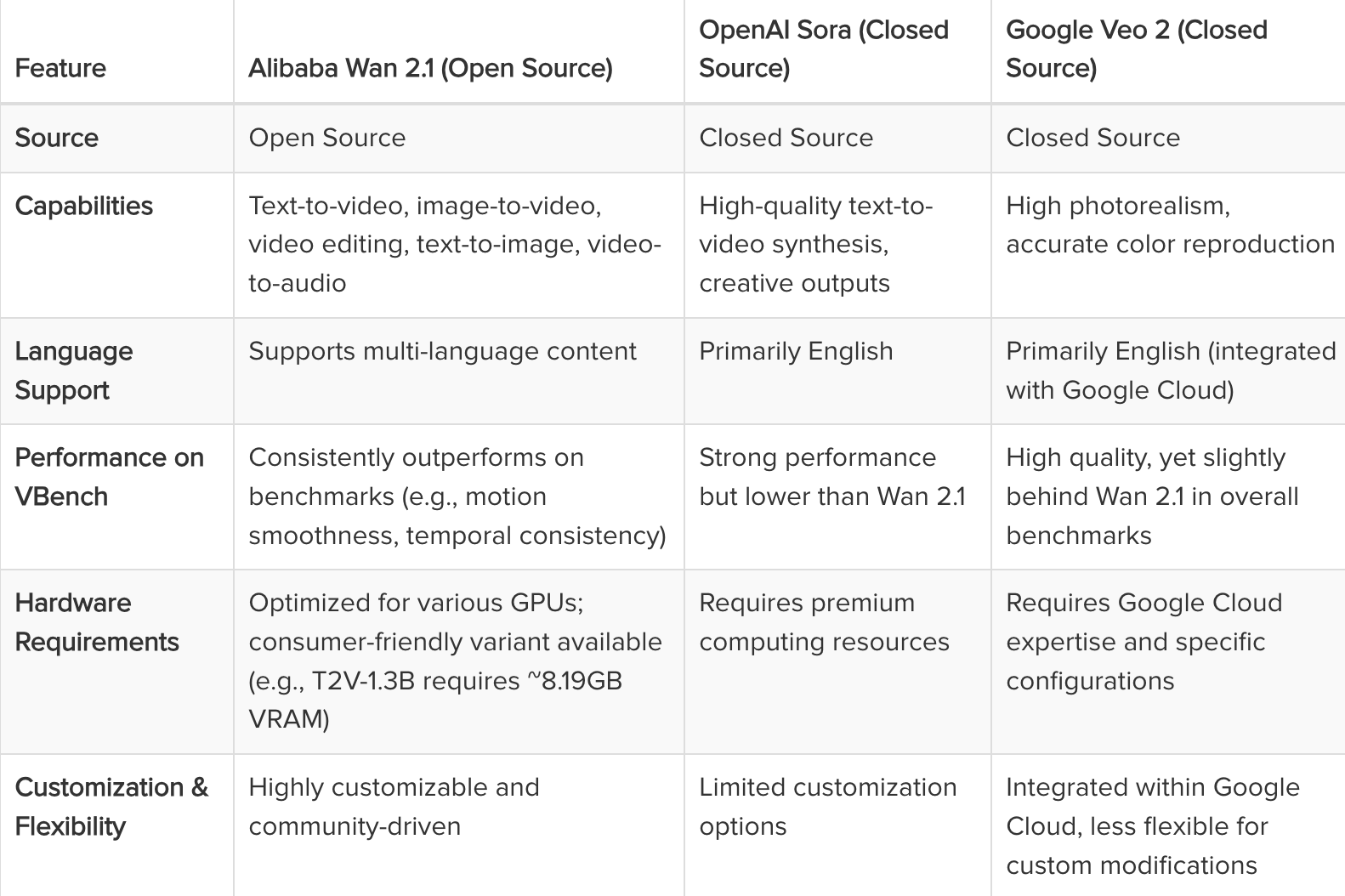

Comparison

Conclusion

Alibaba's Wan 2.1, Google's Veo 2, and OpenAI's Sora represent significant milestones in video generation technology. Wan 2.1 stands out due to its open-source release, faster video reconstruction, and support for text effects in both Chinese and English.

While Veo 2 and Sora offer competitive capabilities, Wan 2.1’s accessibility and performance make it a compelling choice for diverse applications.
